How would we know if the market were "AGI" pilled?

During Meta’s 2Q’25 call, Mark Zuckerberg mentioned something potentially quite profound:

“Over the last few months, we’ve begun to see glimpses of our AI systems improving themselves. And the improvement is slow for now, but undeniable”

Then, on Meta’s 3Q’25 call, he described Meta’s Family of Apps (FOA) business in a way I have never heard him depict it before. From the call:

This quarter, we saw meaningful advances from unifying different models into simpler, more general models, which drive both better performance and efficiency. And now the annual run rate going through our completely end-to-end AI-powered ad tools has passed $60 billion. And one way that I think about our company overall is that there are 3 giant transformers that run Facebook, Instagram and ads recommendations. We have a very strong pipeline of lots of ways to improve these models by incorporating new AI advances and capabilities.

And at the same time, we were also working on combining these 3 major AI systems into a single unified AI system that will effectively run our family of apps and business using increasing intelligence to improve the trillions of recommendations that we’ll make for people every day.

If you connect these two excerpts from Meta’s last two earnings calls, is it ridiculous to think a growing percentage of Meta’s future unified model improvements will actually come from the AI systems themselves? Is it too much of a reach to imagine that in the next 5-10 years, almost all of the model improvements will eventually come from the AI systems? Of course, the better the model gets, the better the content and ad recommendations will be and the higher Meta’s revenue will be (assuming people still have jobs then; only half-kidding!).

If, in 5 years, almost all the incremental revenue growth starts to come from improvements in Meta’s models that are also initiated/originated by the AI systems themselves, it is hard to imagine Meta needing much incremental headcount. At the very least, there should be a massive reallocation of headcount over time in these big tech companies. Meta’s Super Intelligence team might be just a glimpse of what is about to come in big tech companies over the next decade. If most skills become commodities, the price of bottleneck resources is expected to skyrocket, which is what happened with AI researchers in the last couple of years. Of course, the bottleneck resources or skillsets can also evolve dramatically over time. Notice OpenAI’s blog post published last week:

“Although AI systems are still spikey and face serious weaknesses, systems that can solve such hard problems seem more like 80% of the way to an AI researcher than 20% of the way. The gap between how most people are using AI and what AI is presently capable of is immense.”

It is certainly possible that today’s bottlenecks will prove to be rather short-lived as companies increasingly learn to utilize the full extent of AI’s (current and future) capabilities. Sam Altman in a recent interview with Tyler Cowen tried to highlight some things that are relatively under-discussed:

People talk a lot about the recursive self-improvement loop for AI research, where AI can help researchers, maybe today, write code faster, eventually do automated research, and this thing is well understood, very much discussed. Very little discussed or relatively little discussed are the hardware implications of this: robots that can build other robots, data centers that can build other data centers, chips that can design their own next generation. There’s many hard parts, but maybe a lot of them can get much easier. Maybe the problem of chip design will turn out to be a very good problem for previous generations of chips.

Sam Altman doesn’t have any option other than being a fundraising machine, so we may need to take everything he says with a grain of salt. Nonetheless, Altman does hint at something that I also wonder about.

A good chunk of Nvidia’s moat comes from CUDA (Compute Unified Device Architecture), a parallel computing platform and programming model that has created immense developer lock-in. CUDA exists to bridge the gap between human programmers and the complex architecture of the GPU. As AI systems become more capable over time, they should not require human-friendly abstraction layers, SDKs, or documentation. An AI system could theoretically look at any piece of hardware (e.g. an Nvidia GPU, a Google TPU, or a novel architecture it just designed) and write perfectly optimized machine code for it. If humans are increasingly out of the loop, shouldn’t the friction that keeps developers locked into CUDA also materially diminish over time?

In fact, the very idea of a picks and shovels company being the largest company in the world should perhaps create cognitive dissonance for AI bulls. Picks and shovels are immensely valuable when you have no idea where exactly the gold can be found, and yet there is an army of people rushing to dig for gold anyway. However, if there are only a handful of companies left digging for gold and one or two consistently start finding gold in larger quantities, they may eventually try to abstract away picks and shovels and vertically integrate the entire gold digging process.

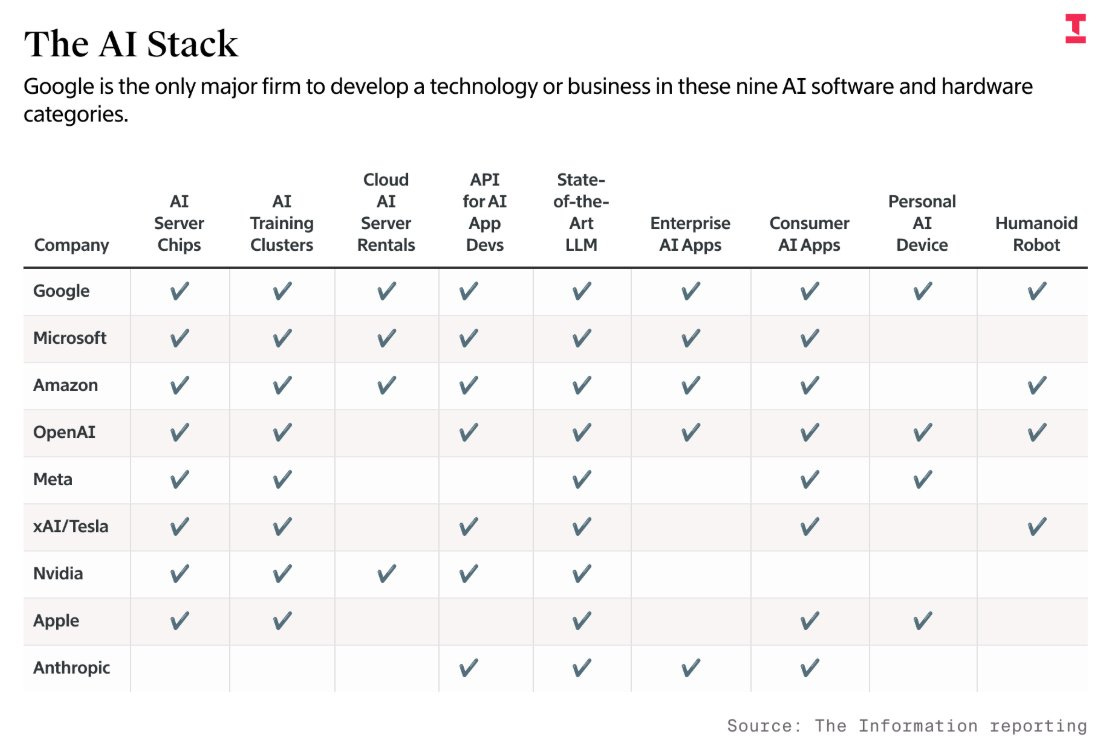

The company that has already made this bet is Alphabet. It is vertically integrated and has a full-stack approach to AI that its nearest competitors are trying to replicate as soon as possible.

Among the major players in the AI value chain, I think until recently Jensen Huang was far from “AGI” pilled. If you truly believed in “AGI”, would you sell your chips to third parties as much as possible instead of trying to build your own lab to develop the model itself? I say “until recently” because Huang does seem to be getting “AGI” pilled now that Nvidia is investing in OpenAI.

I have long thought that one of the biggest questions in big tech land over the next 3-4 years is what Nvidia will do with its expected ~$817 billion of cumulative Free Cash Flow (FCF) over four years (consensus estimates for FY2027 through FY2030). Nvidia seems to have a bitter relationship with Anthropic; it wouldn’t surprise me if Huang directs a good chunk (majority?) of this cumulative FCF to OpenAI and xAI.

In hindsight, Alphabet actually appears much more “AGI” pilled than most companies; selling TPUs to others stops making sense if you think there is a viable path to “AGI”. I know there are strong murmurs that this strategy is evolving, but I will wait to see who exactly Alphabet is selling these TPUs to before inferring too much. If it’s other model developers such as Anthropic or SSI (which has the least pragmatic approach but is by far the most “AGI” pilled), it would indicate Alphabet is still quite “AGI” pilled and is just hedging its bets a bit.

With a full-stack approach to AI (and some hedges through Anthropic ownership), Alphabet will likely be the most valuable company in the world if the market were getting increasingly “AGI” pilled. I suspect that may still not be enough to infer confidently that the market is getting “AGI” pilled, especially since Alphabet is already the most profitable company in the world. However, if OpenAI and Anthropic IPO and either of them enjoys a persistently higher market cap than some of the big tech companies (e.g. Meta), you could say the market is warming up to the idea that developing the best model itself will ultimately be the best business, near-term earnings and cash flows be damned!

Elon Musk, Sam Altman, Mark Zuckerberg, Demis Hassabis + Sundar Pichai…I suspect all of them are higher on the scale of being “AGI” pilled than their shareholders today (okay, maybe OpenAI’s investors are more so than others). If most investors over time get closer to where these CEOs probably are today, especially if more evidence of recursively self-improving models emerges in the next couple of years, I would like to own more Alphabet in such a world, which should explain my recent decision to add to my Alphabet position. While the stock isn’t “cheap” anymore, we don’t quite need to be “AGI” pilled to see Alphabet’s potential either.

As I have mentioned earlier, I believe “AGI” is not a moment in time but rather an asymptote towards which we may perennially march. Just as we didn’t let out any audible gasp when the Turing test was passed, our days may seem surprisingly similar as we get ever closer to “AGI”.

In addition to a “Daily Dose” (yes, DAILY) like this one, MBI Deep Dives publishes one Deep Dive on a publicly listed company every month. You can find all 64 Deep Dives here.

Current Portfolio:

Please note that these are NOT my recommendations to buy/sell these securities, but just a disclosure from my end so that you can assess potential biases that I may have because of my own personal portfolio holdings. Always consider my write-up my personal investing journal and never forget that my objectives, risk tolerance, and constraints may bear no resemblance to yours.

My current portfolio is disclosed below: