The Ecosystem Advantage

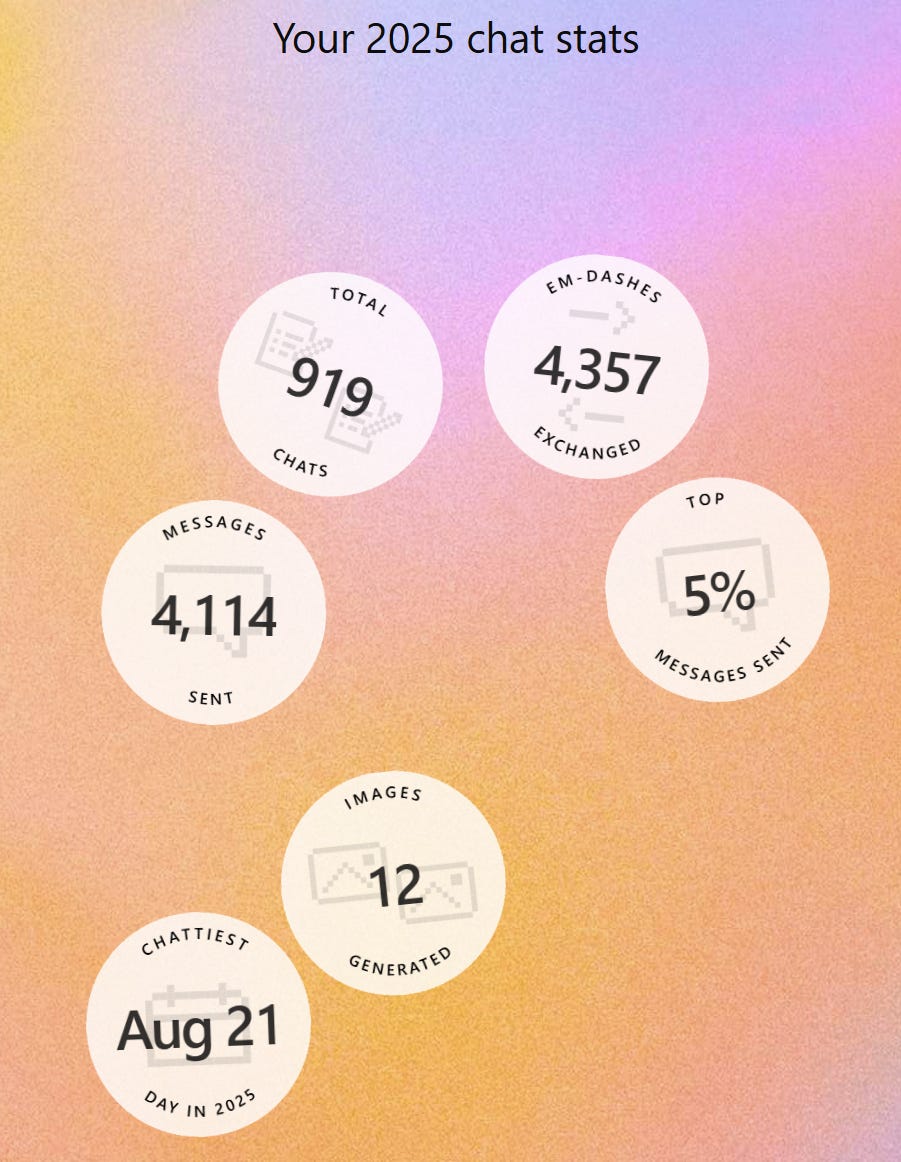

Like “Spotify Wrapped”, ChatGPT yesterday let its users see their 2025 stats, which was fun to go through. I almost exclusively use the ChatGPT Pro model (with extended thinking), which makes it hard for me to casually chat with ChatGPT as it typically takes 10-20 minutes for each of my queries. Nonetheless, ChatGPT is telling me I was in the top 5% of users in 2025.

I think ChatGPT has such a great product market fit (PMF) for investment and tech folks that I suspect ~50% of people working in analyst roles or people who need to code a lot are likely in the top 5% of ChatGPT users this year. In fact, when I shared my stats in a couple of investing group chats I am active in, it turned out everyone else is in the top 1% of users! I will be surprised if my usage doesn’t increase next year; my usage (I think) has gradually increased over the course of 2025.

However, such an incredible PMF in our particular use cases can also make us susceptible to extrapolating our own experiences to the wider world a bit too much. One of my friends was wondering: if usage keeps increasing on ChatGPT, will there be any person or any company that can get to know you better than ChatGPT can in a few years?

The answer to that question can have profound implications, especially in an economic context. But I think the answer depends a lot on who the user is. If you are consistently in the top 1% of ChatGPT users for a few years, it is highly likely that ChatGPT will “know and understand” you better than any person or company can, and it is an innate desire for humans to interact with people/things that just “get” them.

But it can remain a pretty uphill task for ChatGPT to “understand” the ~90% of its users who likely just don’t spend as much time on the app as the top 1-5% do. Ultimately, it requires a lot of agency for the user to come up with a question they can ask. If you don’t have a question to ask (or ask one only sparingly), ChatGPT is close to a blank slate in terms of outlining a picture of who you are and what you may be interested in.

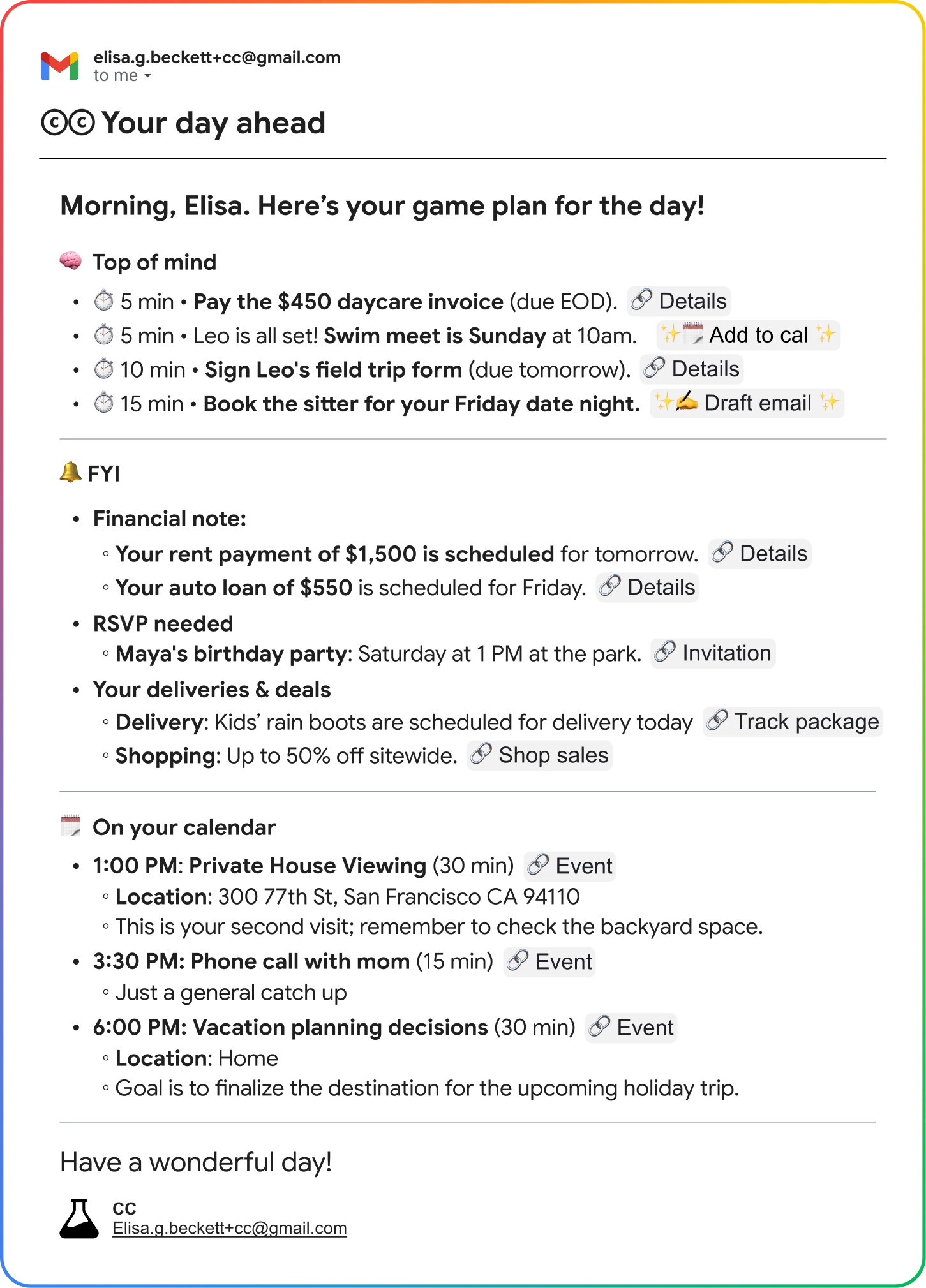

Contrast this with Google, which has an entire ecosystem of products to know you better. That will likely come in pretty handy as they let AI features gather more context and data across the ecosystem to create a holistic picture of its users. Take Google’s new feature called “CC” in Gmail. Google hasn’t released this feature for the wider public yet, but you can join the waitlist. I signed up for this last week, and basically what “CC” does is go through all my emails/calendar every day and then send me one email in the morning on what I should pay attention to. On the very first day, it reminded me to update the payroll for the nanny we recently hired for our son. Even if I stop using Google search tomorrow, Google will still have a lot of this handy context to make its products more useful for me. I’m not sharing my personal email’s screenshot, but here’s one from Google:

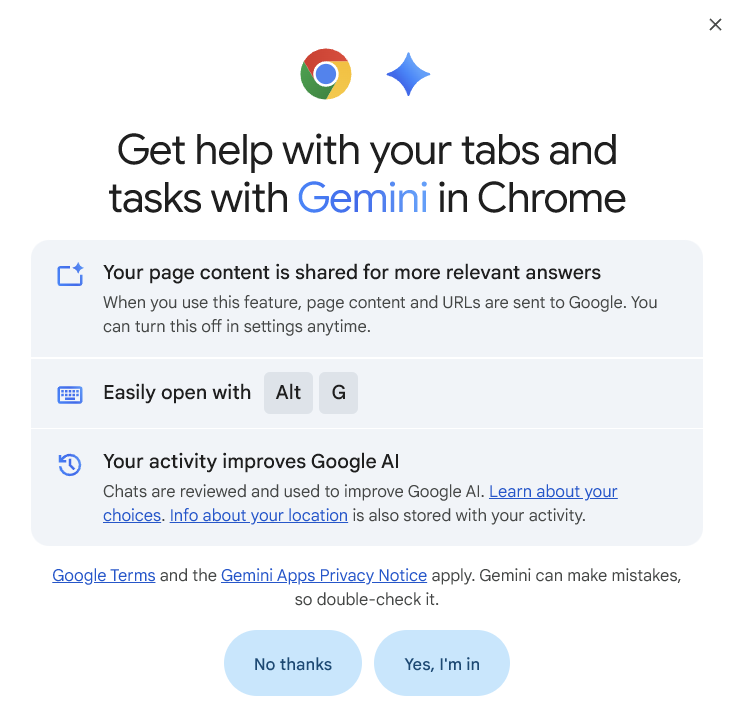

When I clicked Gemini’s diamond icon at the top right of my Chrome browser yesterday to summarize a piece I was reading, Google asked me the following. Notice what it says: “when you use this feature, page content and URLs are sent to Google.” Even if I don’t use Google search/Gemini chat, Google will have a pretty clear understanding of what I am reading and browsing across the internet.

Then consider YouTube. Recently, I have been going through a lot of YouTube videos to understand the host experience on Airbnb. As we all know, YouTubers are incentivized to lengthen their videos to increase watch time, and hence almost all the videos about their experience are 10-20 minutes long. I could basically cut down the time spent on these videos by 90% just by clicking the “Ask” button on YouTube to get a summary of the video. I could probably go through 20 such videos in 20 minutes instead of just one. I can probably go through half a dozen other Google products to make the same point. As you can see, Gemini is quite useful for me even though Gemini is not my primary chatbot.

The real advantage of Google, however, is its ability to monetize Gemini through ads even though they are not putting ads on the core Gemini chatbot itself. People speculate about when Google may insert ads in Gemini; frankly speaking, I will be surprised if they insert ads anytime soon. It is far more strategically advantageous for them to keep the core Gemini experience ad free to essentially force ChatGPT to not introduce ads as well. Google already has the widest canvas you can think of for monetizing Gemini through ads, a point eloquently made by Eric Seufert in a recent piece:

“whether ads are ever inserted into Google’s Gemini app is largely irrelevant: Gemini already monetizes with ads through the vast surface areas of AI Overviews and AI Mode. And in fact, for that reason, the user base scale of the Gemini app is also mostly inconsequential: Google has consistently applied Gemini to its portfolio of consumer-facing products, like Search, Chrome, and Gmail, in ways that may not directly incorporate advertising but nonetheless support the advertising business model.”

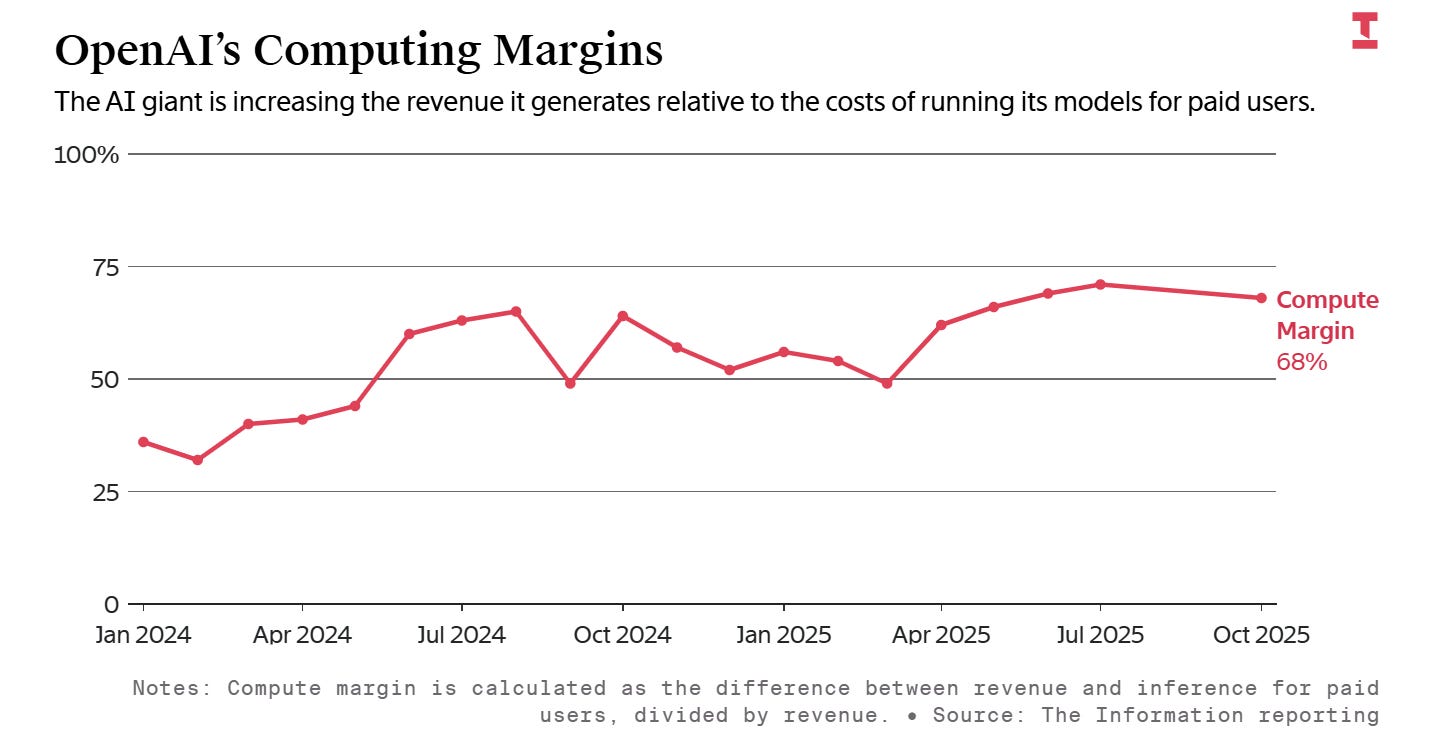

The question of economics is likely to become more front and center over time. The Information recently reported that OpenAI’s computing margin has substantially improved from ~35% in Jan’24 to ~68% in Oct’25. That sounds very impressive, but remember, this is only for paid users, who are typically ~5% of OpenAI’s users. The costs associated with the remaining ~95% who are using ChatGPT are not considered in this “margin” calculation. For ChatGPT, it is perhaps all but necessary to introduce ads at some point soon, but they know it’s almost certainly going to be a bit of a headwind for incremental growth, especially when the Gemini chat experience will likely remain ad free. And of course, the question that looms large is the training related costs. Even if you make respectable margins on inference, the question of when you can really get off, or at least slow down, the treadmill of training the next model is the most uncomfortable question in AI.
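To make the paid-versus-everyone point concrete, here is a rough sketch of how the headline margin shrinks once free-tier compute is charged against the same revenue. It assumes all revenue comes from paid users, and the free-tier cost shares in the loop are made-up placeholders, not reported figures; only the ~68% comes from the report mentioned above.

```python
def blended_compute_margin(paid_margin, free_cost_share_of_revenue):
    """Compute margin after also charging free-user compute against revenue.

    paid_margin: reported compute margin on paid users (e.g. 0.68).
    free_cost_share_of_revenue: compute cost of serving free users, expressed
        as a fraction of total revenue. HYPOTHETICAL input, not a reported number.
    Assumes all revenue comes from paid users.
    """
    return paid_margin - free_cost_share_of_revenue


paid_margin = 0.68  # ~68% as of Oct'25, per the report cited in the text

# Hypothetical free-tier cost shares, purely for illustration.
for share in (0.10, 0.30, 0.50):
    margin = blended_compute_margin(paid_margin, share)
    print(f"free-tier cost = {share:.0%} of revenue -> blended margin = {margin:.0%}")
```

The actual free-tier cost is not disclosed in anything cited here, so the only takeaway is directional: whatever that cost is, it comes straight off the headline margin.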

A reader recently highlighted a piece on China’s two frontier AI labs, Zhipu and MiniMax, both of which filed for IPO last week. It’s an interesting piece that I recommend reading, and it really sheds light on the questions I have been alluding to here. Now, there are certainly massive differences between these Chinese labs’ go-to-market and OpenAI’s, but the core questions are still valid. Some excerpts from the piece:

“Zhipu’s gross margin sits at 56%. Strong by any standard, especially for a company selling complex enterprise software. MiniMax’s cloud API business generates 69.4% gross margins. These are SaaS-level economics.

Yet Zhipu lost ¥2.47 billion on ¥312 million in revenue last year. The loss is eight times the revenue. MiniMax lost $465 million on $30.5 million in revenue. Fifteen times.

Research and development consumed ¥2.2 billion of Zhipu’s budget in 2024. That’s a 26x increase from the ¥84 million spent in 2022. Within that R&D figure, ¥1.55 billion went directly to compute services. Computing infrastructure alone ate 70% of the entire R&D budget.

MiniMax shows better cost discipline but faces the same fundamental pressure…The ratio of training costs to revenue dropped from 1,365% in 2023 to 266% in the first three quarters of 2025. But even at 266%, you’re spending nearly $3 on training for every $1 of revenue.

This creates the first paradox. At the transaction level, these businesses are profitable. Sell an API call or a subscription, you make money. Scale that up, you should make more money. But scaling requires maintaining competitive model quality. Competitive model quality requires constant compute investment. The compute investment grows faster than revenue…competition determines the required investment level, not customer demand.

Zhipu released six core models in less than three months following R1’s debut. That kind of release cadence doesn’t happen with planned budgets. It happens when competition forces your hand. Each model release requires compute for training, evaluation, and deployment. The costs compound.

MiniMax faced the same pressure despite its efficiency advantages. Being 100x more capital-efficient than OpenAI means nothing when a domestic competitor proves you can do more with even less. The bar keeps rising. The cost of staying relevant keeps climbing.

This reveals what makes the situation structural rather than cyclical. Your strategy becomes irrelevant when competitive dynamics dictate behavior. Zhipu chose scale. MiniMax chose efficiency. DeepSeek’s emergence forced both to spend more regardless of their chosen path.

The competitive advantage has shifted from technical capability to balance sheet depth. Zhipu and MiniMax both demonstrated they can build competitive models. That’s no longer sufficient. The question becomes: can you afford to keep building competitive models quarter after quarter as the bar keeps rising? When DeepSeek forces another iteration cycle, can you write another ¥1 billion check?

Platform companies gain structural advantage because they can sustain losses that would bankrupt independents. ByteDance, Alibaba, and Tencent control compute infrastructure. They own distribution channels. They generate cash from other businesses. A ¥2 billion annual loss is a rounding error in their consolidated P&L statements. They can treat AI model development as a strategic investment, not a profit center that must justify itself quarterly.”
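As a quick sanity check on the multiples quoted in the excerpts, the arithmetic can be reproduced in a few lines. This is only a sketch: the inputs are the rounded figures from the excerpt (Zhipu in ¥ millions, MiniMax in $ millions), and the variable names are mine.

```python
# Rounded figures as quoted in the excerpt above.
zhipu_loss, zhipu_revenue = 2470.0, 312.0      # RMB millions
minimax_loss, minimax_revenue = 465.0, 30.5    # USD millions
zhipu_rnd_2024, zhipu_rnd_2022 = 2200.0, 84.0  # RMB millions
zhipu_compute_2024 = 1550.0                    # RMB millions, part of 2024 R&D

# Loss as a multiple of revenue.
zhipu_loss_multiple = zhipu_loss / zhipu_revenue
minimax_loss_multiple = minimax_loss / minimax_revenue

# Growth in Zhipu's R&D spend and the share of it going to compute.
rnd_growth = zhipu_rnd_2024 / zhipu_rnd_2022
compute_share = zhipu_compute_2024 / zhipu_rnd_2024

# MiniMax training cost per unit of revenue at a 266% ratio.
training_per_revenue_dollar = 266 / 100

print(f"Zhipu loss / revenue:   {zhipu_loss_multiple:.1f}x")
print(f"MiniMax loss / revenue: {minimax_loss_multiple:.1f}x")
print(f"Zhipu R&D growth:       {rnd_growth:.1f}x")
print(f"Compute share of R&D:   {compute_share:.1%}")
print(f"Training per $1 revenue: ${training_per_revenue_dollar:.2f}")
```

The results line up with the excerpt’s rounding: roughly 8x and 15x losses relative to revenue, about 26x R&D growth, about 70% of R&D going to compute, and close to $3 of training spend per $1 of revenue.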

Speaking of ecosystem advantage, let’s go back to Eric Seufert, who ended his piece with the same conclusion:

“The entirety of Google’s consumer-facing product suite (including YouTube), as well as the Google Cloud Platform, is a distribution conduit for the company’s AI initiatives. This spans multiple billions of consumers, all of which are already monetized through ads. Every dollar Google invests in AI research and development can immediately improve an existing revenue line item.”

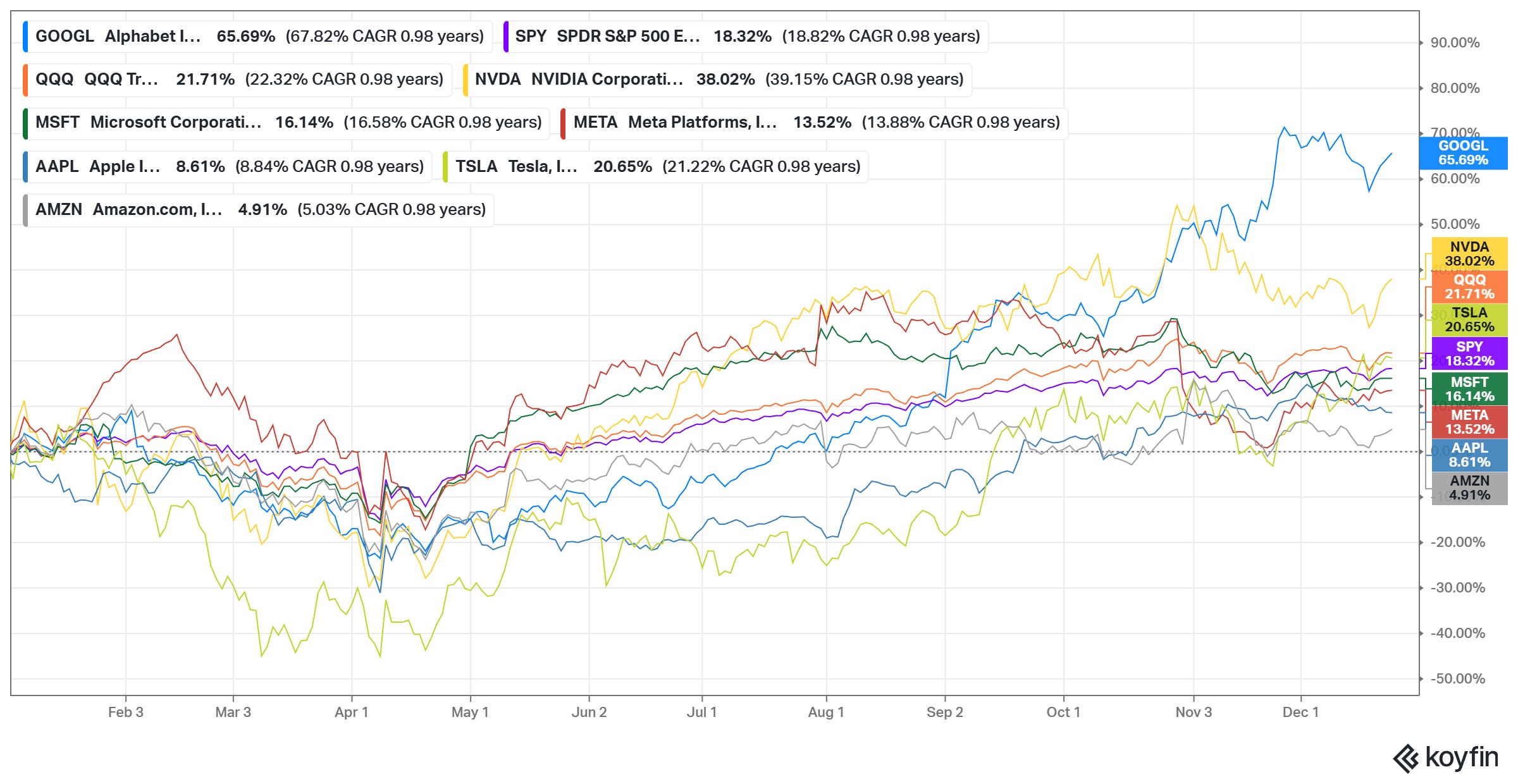

While the questions around Google search seemed existential not so long ago, the market has eventually come to appreciate this ecosystem advantage, as Alphabet is fittingly ending 2025 as the best-performing megacap tech stock of the year!

In addition to “Daily Dose” (yes, DAILY) like this, MBI Deep Dives publishes one Deep Dive on a publicly listed company every month. You can find all 65 Deep Dives here.

Current Portfolio:

Please note that these are NOT my recommendations to buy/sell these securities, but just a disclosure from my end so that you can assess potential biases that I may have because of my own personal portfolio holdings. Always consider my write-up my personal investing journal, and never forget that my objectives, risk tolerance, and constraints may have no resemblance to yours.

My current portfolio is disclosed below: