Meta 2Q'25, CoStar suing Zillow, Portfolio Change

Final Reminder: Prices for new subscribers will increase to $30/month or $250/year from tomorrow. Anyone who joins by July 31, 2025 will keep today’s pricing of $20/month or $200/year.

I have been publishing every day since the beginning of this month. I hope it is clear by now that it takes a lot of commitment and energy to produce this every day. I don’t think I have ever been as productive in my life as I was in the last 30 days…publishing something every day certainly keeps you on your toes! I have been particularly encouraged by how many of you have voted with your wallets so far, which has only bolstered my confidence in this direction. Thank you very much!

Meta 2Q’25 Update

Wow! That’s what I said to myself a couple of times while going through Meta’s earnings yesterday.

Let me go through the earnings to explain my reaction!

Users

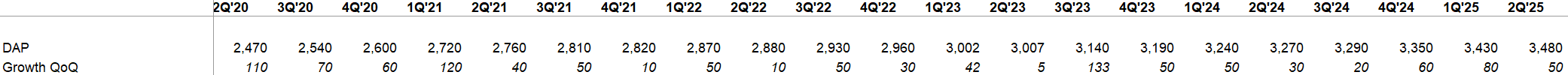

Meta still hasn’t run out of people to add as incremental users to at least one of its apps. I do want to note that they didn’t disclose monthly active users for Threads this quarter after disclosing it for the last seven quarters. My guess is that MAU growth there slowed noticeably in the last quarter.

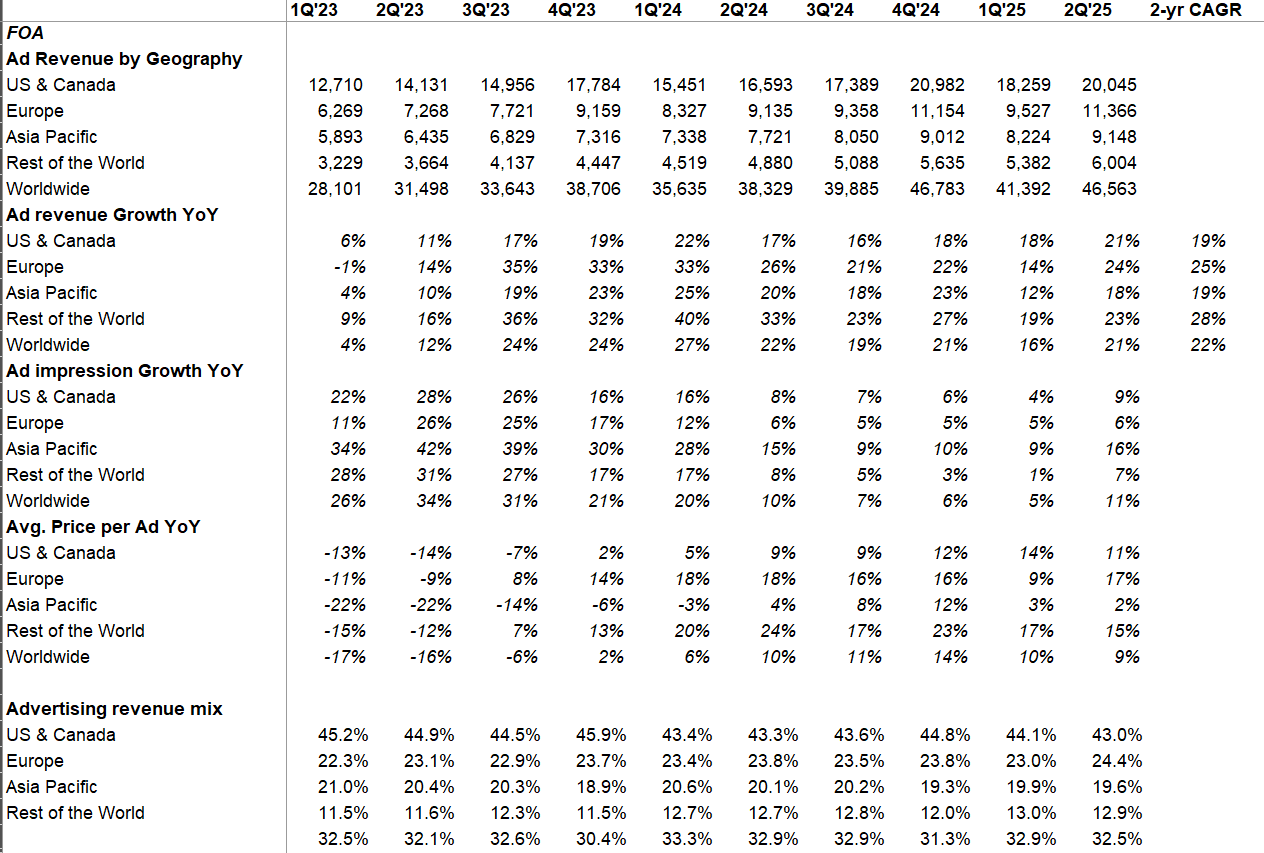

Ad revenue by Geography

After a dip in impression growth in 1Q’25, it accelerated to ~11% YoY in 2Q’25 while also increasing average ad price by ~9%. The combination of these two drove overall ad revenue to grow by ~21% YoY. Growth was pretty indiscriminate across regions.
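To see how the two drivers combine, here is a minimal sketch of the arithmetic. It assumes ad revenue is simply impressions times average price per ad, so the growth rates compound rather than add. The function name is my own and the inputs are the rounded figures quoted above.

```python
def revenue_growth(impression_growth: float, price_growth: float) -> float:
    """Revenue = impressions x average price, so growth rates compound."""
    return (1 + impression_growth) * (1 + price_growth) - 1


# Rounded inputs from the text: ~11% impression growth, ~9% price growth
growth = revenue_growth(0.11, 0.09)
print(f"Implied ad revenue growth: {growth:.1%}")  # Implied ad revenue growth: 21.0%
```

The compounding term (11% x 9%, or about one point) is why the total lands at ~21% rather than 20%.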

I am quite encouraged to see the uptick in impression growth. I’m much more confident about Meta’s ability to monetize impressions over time, so growing impressions boosts my confidence that there is ample headroom to scale monetization further as targeting and conversion continue to improve thanks to AI.

Zuck in his prepared remarks did mention that the new AI-powered recommendation model for ads improved its performance by using more signals and longer context which led to 5% more ad conversions on Instagram and 3% on Facebook. These numbers may seem low for casual observers at first glance, but please keep in mind the scale of these platforms; every 1% improvement may unlock ~$1 Billion revenue for Meta!

It’s not just conversion, it’s also helping grow impressions. From Zuck:

“AI is significantly improving our ability to show people content that they're going to find interesting and useful. Advancements in our recommendation systems have improved quality so much that it has led to a 5% increase in time spent on Facebook and 6% on Instagram, just this quarter. There is a lot of potential for content itself to get better too, we're seeing early progress with the launch of our AI video editing tools across Meta AI and our new Edits app.”

CFO Susan Li later also shared additional data points:

We continue to see momentum with video engagement, in particular. In Q2, Instagram video time was up more than 20% year-over-year globally. We're seeing strong traction on Facebook as well, particularly in the U.S., where video time spent similarly expanded more than 20% year-over-year. These gains have been enabled by ongoing optimizations to our ranking systems to better identify the most relevant content to show.

We are also making good progress on our longer-term ranking innovations that we expect will provide the next leg of improvements over the coming years. Our research efforts to develop cross-surface foundation recommendation models continue to progress. We are also seeing promising results from using LLM in Threads recommendation systems. The incorporation of LLMs are now driving a meaningful share of the ranking related time spent gains on Threads.

We're now exploring how to extend the use of LLMs and recommendation systems to our other apps.

So, AI is basically working as a twin tailwind on both sides of the business: it increases time spent on the platform through more engaging and relevant content AND it increases conversion for the advertisers. Win-win!

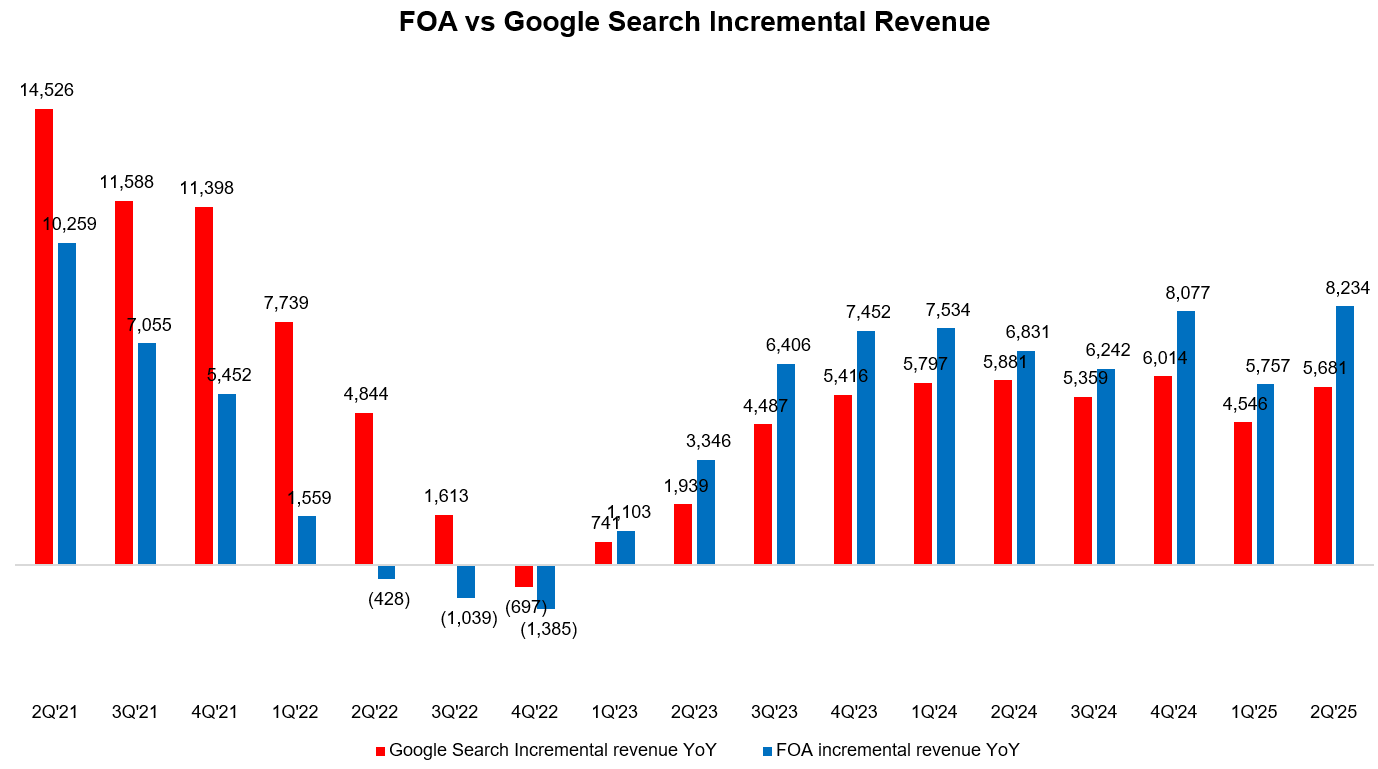

You can perhaps appreciate Meta’s revenue trajectory more when you contrast it with Google Search.

The delta between LTM Google Search revenue and LTM Family of Apps (FOA) revenue was $48.4 Billion in 1Q’23. That delta has narrowed to $33.7 Billion in just two years. If this continues, FOA revenue may eclipse Google Search revenue in the next 5 years!
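A rough way to sanity-check the "next 5 years" timing is a straight-line extrapolation of the gap. This is only a sketch: it assumes the gap keeps closing at the same dollar pace, which is my simplification and not a forecast. The elapsed time is shown both as the "two years" used above and as 2.25 years, on the assumption that the second figure is as of 2Q’25 (nine quarters after 1Q’23).

```python
def years_to_close(start_gap: float, end_gap: float, elapsed_years: float) -> float:
    """Linear extrapolation: years until the gap reaches zero at the observed pace."""
    closing_per_year = (start_gap - end_gap) / elapsed_years
    return end_gap / closing_per_year


# Gap figures in $ Bn, taken from the text
for elapsed in (2.0, 2.25):
    remaining = years_to_close(48.4, 33.7, elapsed)
    print(f"elapsed={elapsed} yrs -> ~{remaining:.1f} more years to close the gap")
# elapsed=2.0 yrs -> ~4.6 more years to close the gap
# elapsed=2.25 yrs -> ~5.2 more years to close the gap
```

Either way, the straight-line answer lands around five years out, which is where the 2030 timing comes from.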

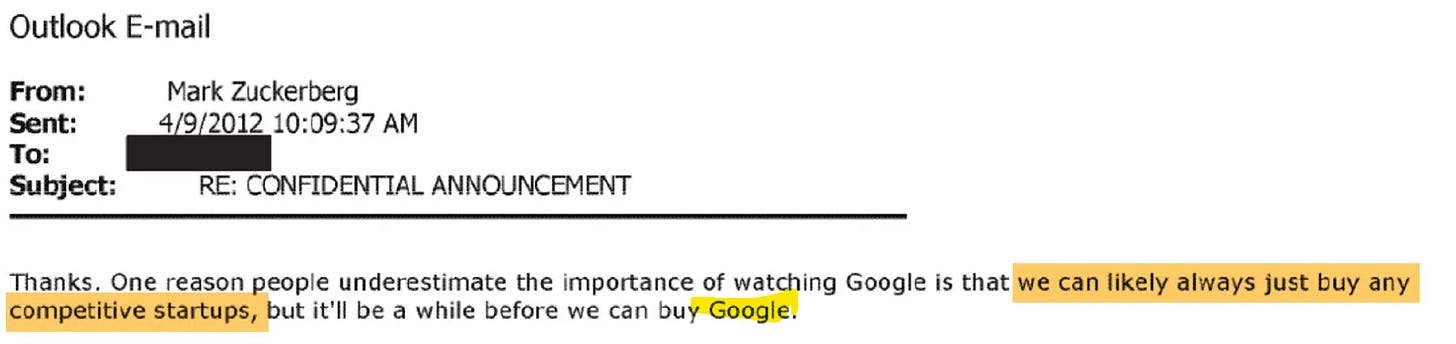

Thinking about the possibility of FOA revenue eclipsing Google Search in 2030 or so reminded me of Zuck’s joke to someone: “it’ll be a while before we can buy Google”. 🫡

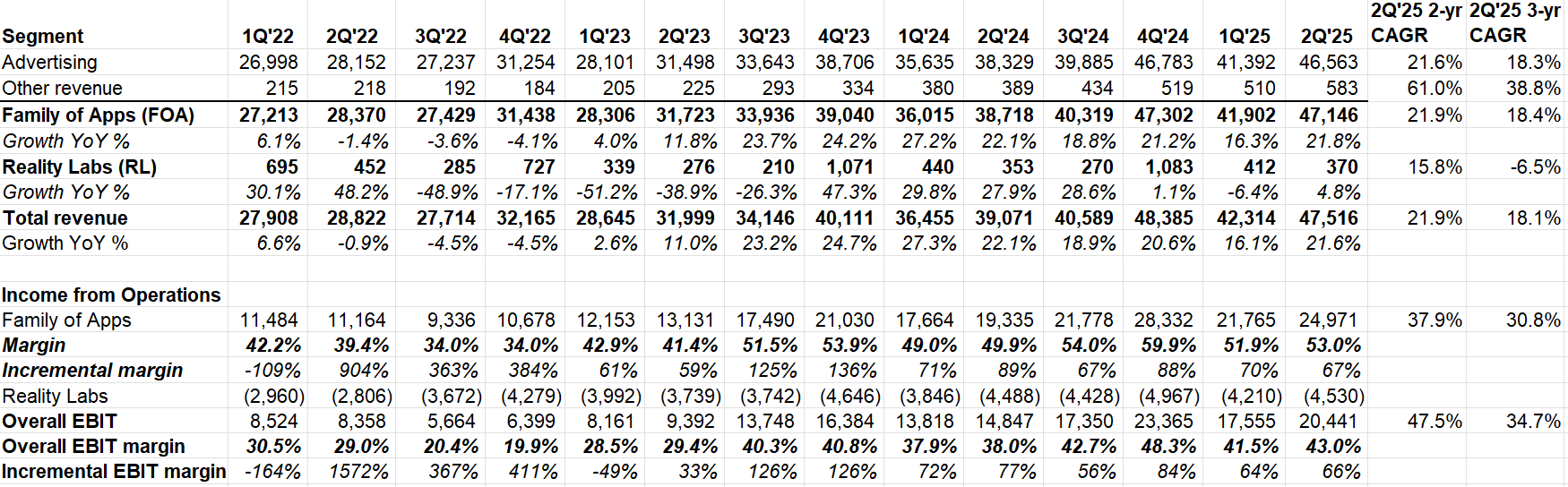

Segment Reporting

Meta initially guided $42.5 Bn to $45.5 Bn revenue for 2Q’25, but generated $47.5 Bn revenue. Overall 2Q’25 revenue was +22% YoY (vs consensus estimates of ~15% YoY and ~19% FXN in 1Q’25). This is a sizable beat!

“Other revenue” accelerated to ~50% YoY, driven by Meta Verified subscriptions and WhatsApp messaging. This revenue line item has been growing at a rapid pace for the last couple of years, but it is still a rounding error in FOA revenue. Business messaging is gaining traction even in the US:

we're seeing good momentum in business messaging, particularly in the U.S., where click to message revenue grew more than 40% year-over-year in Q2. The strong U.S. growth is benefiting from a ramp in adoption of our website to message ads, which drive people to a business's website for more information before choosing to launch a chat with the business in one of our messaging apps.

FOA continued to post >50% operating margins while maintaining ~60% (or more) incremental margins for the last 13 consecutive quarters. From 2Q’22 to 2Q’25, Meta added ~$19 Billion incremental quarterly revenue at an eye-watering ~74% incremental operating margin!!
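For readers less familiar with the term, incremental margin is the change in operating income divided by the change in revenue between two periods. The sketch below shows the definition and what the rounded figures above imply in dollars. The function name is my own, and the second example uses made-up numbers purely to illustrate the formula.

```python
def incremental_margin(rev_start: float, rev_end: float,
                       op_income_start: float, op_income_end: float) -> float:
    """Change in operating income divided by change in revenue."""
    return (op_income_end - op_income_start) / (rev_end - rev_start)


# Rounded figures from the text: ~$19 Bn added quarterly revenue at ~74% incremental margin
implied_added_op_income = 19.0 * 0.74
print(f"Implied added quarterly operating income: ~${implied_added_op_income:.1f} Bn")
# Implied added quarterly operating income: ~$14.1 Bn

# Hypothetical inputs (NOT Meta's reported numbers), just to show the formula at work
example = incremental_margin(rev_start=100.0, rev_end=150.0,
                             op_income_start=40.0, op_income_end=75.0)
print(f"Hypothetical incremental margin: {example:.0%}")  # Hypothetical incremental margin: 70%
```

In other words, roughly $14 Billion of the ~$19 Billion in added quarterly revenue fell through to operating income.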

For Reality Labs, it was another quarter of $4 Billion+ losses. Thankfully, Ray-Ban Meta glasses are a glimmer of hope that all these investments will put Meta in pole position if AI glasses do gain wide adoption, which I am pretty optimistic about. From the call:

The growth of Ray-Ban Meta sales accelerated in Q2, with demand still outstripping supply for the most popular SKUs despite increases to our production earlier this year. We're working to ramp supply to better meet consumer demand later this year.

Superintelligence

Meta has been constantly in the news for its recruiting efforts to build the Superintelligence team. I will let Meta management speak for themselves on this topic. It’s a long excerpt from various parts of the call, but it is important enough that I’m quoting so much:

Over the last few months, we've begun to see glimpses of our AI systems improving themselves. And the improvement is slow for now, but undeniable and developing superintelligence, which we define as AI that surpasses human intelligence in every way, we think, is now in sight. Meta's vision is to bring personal superintelligence to everyone, so that people can direct it towards what they value in their own lives. And we believe that this has the potential to begin an exciting new era of individual empowerment.

A lot has been written about all the economic and scientific advances that superintelligence can bring, and I'm extremely optimistic about this. But I think that if history is a guide, then an even more important role will be how superintelligence empowers people to be more creative, develop culture and communities, connect with each other and lead more fulfilling lives.

To build this future, we've established Meta Superintelligence Labs, which includes our foundations, product and FAIR teams as well as a new lab that is focused on developing the next generation of our models. We're making good progress towards Llama 4.1 and 4.2, and in parallel, we are also working on our next generation of models that will push the frontier in the next year or so.

We are building an elite, talent-dense team Alexandr Wang is leading the overall team, Nat Friedman is leading our AI Products and Applied Research, and Shengjia Zhao is Chief Scientist for the new effort. They are all incredibly talented leaders, and I'm excited to work closely with them and the world-class group of AI researchers and infrastructure and data engineers that we're assembling.

At a high level, I think that there are all these questions that people have about what are going to be the time lines to get to really strong AI or superintelligence or whatever you want to call it. And I guess that each step along the way so far, we've observed the more kind of aggressive assumptions, or the fastest assumptions have been the ones that have most accurately predicted what would happen. And I think that, that just continued to happen over the course of this year, too.

And so I've given a number of those anecdotes on these earnings calls in the past. And I think, certainly, some of the work that we're seeing with teams internally being able to adapt Llama 4 to build autonomous AI agents that can help improve the Facebook algorithm to increase quality and engagement are like -- I mean, that's like a fairly profound thing if you think about it. I mean it's happening in low volume right now. So I'm not sure that, that result by itself was a major contributor to this quarter's earnings or anything like that. But I think the trajectory on this stuff is very optimistic.

And I think it's one of the interesting challenges in running a business like this now is there's just a very high chance it seems like the world is going to look pretty different in a few years from now. And on the one hand, there are all these things that we can do, there are improvements to our core products that exist.

And then I think we have this principle that we believe in across the company, which we tell people take superintelligence seriously. And the basic principle is this idea that we think that this is going to really shape all of our systems sooner rather than later, not necessarily on the trajectory of a quarter or 2, but on the trajectory of a few years. And I think that that's just going to change a lot of the assumptions around how different things work across the company.

I think that for developing superintelligence, at some level, you're not just going to be learning from people because you're trying to build something that is fundamentally smarter than people. So it's going to need to learn how to -- or you're going to need to develop a way for it to be able to improve itself.

So that, I think, is a very fundamental thing. That is going to have a very broad implications for how we build products, how we run the company, new things that we can invent, new discoveries that can be made, society more broadly. I think that that's just a very fundamental part of this.

In terms of the shape of the effort overall, I guess I've just gotten a little bit more convinced around the ability for small talent-dense teams to be the optimal configuration for driving frontier research. And it's a bit of a different setup than we have on our other world-class machine learning system.

So if you look at like what we do in Instagram or Facebook or our ad system, we can very productively have many hundreds or thousands of people basically working on improving those systems, and we have very well-developed systems for kind of individuals to run tests and be able to test a bunch of different things. You don't need every researcher there to have the whole system in their head. But I think for this -- for the leading research on superintelligence, you really want the smallest group that can hold the whole thing in their head, which drives, I think, some of the physics around the team size and how -- and the dynamics around how that works.

Zuck’s voice also wavered a bit when asked about open source. To be fair, Zuck was always clear that he’s not making any promises that Meta will always open source everything, but my guess is if the Superintelligence team delivers, Meta’s open source approach may look somewhat similar to what Google and OpenAI have been doing. You can even argue that by potentially choosing not to open source their most advanced model, Meta may be signaling that they are quite confident in their internal talent pool’s ability to stay at the frontier. We’ll see how their tone evolves over time.

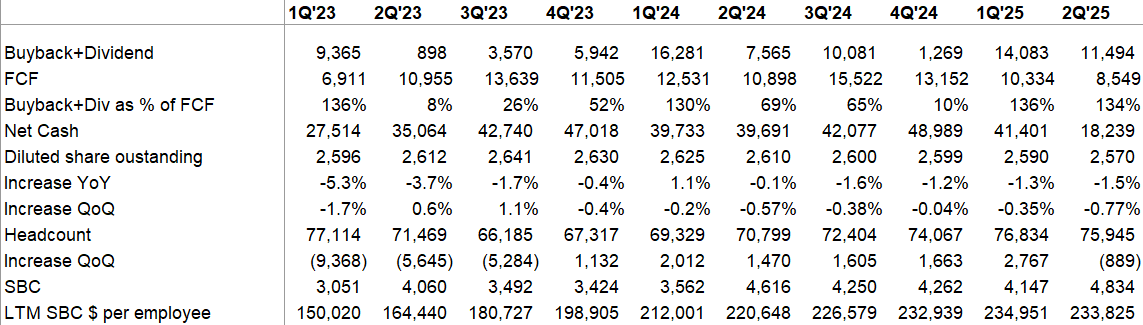

Capital Allocation

For the second consecutive quarter, Meta returned more capital to shareholders than the FCF they generated. Because of that, and thanks to their investment in Scale AI (it wouldn’t surprise me if they take an impairment charge a couple of years down the line here), their net cash balance was only $18 Billion. At this rate, Meta may be in a net debt position sometime next year.

While they’re doling out eye-popping offers to marquee AI researchers, headcount actually declined QoQ. Meta highlighted “performance related reductions” to explain the decline. I suspect this may remain a theme for the foreseeable future at every big tech company as headcount becomes more scrutinized in their budgeting than perhaps at any time in the 2010s.

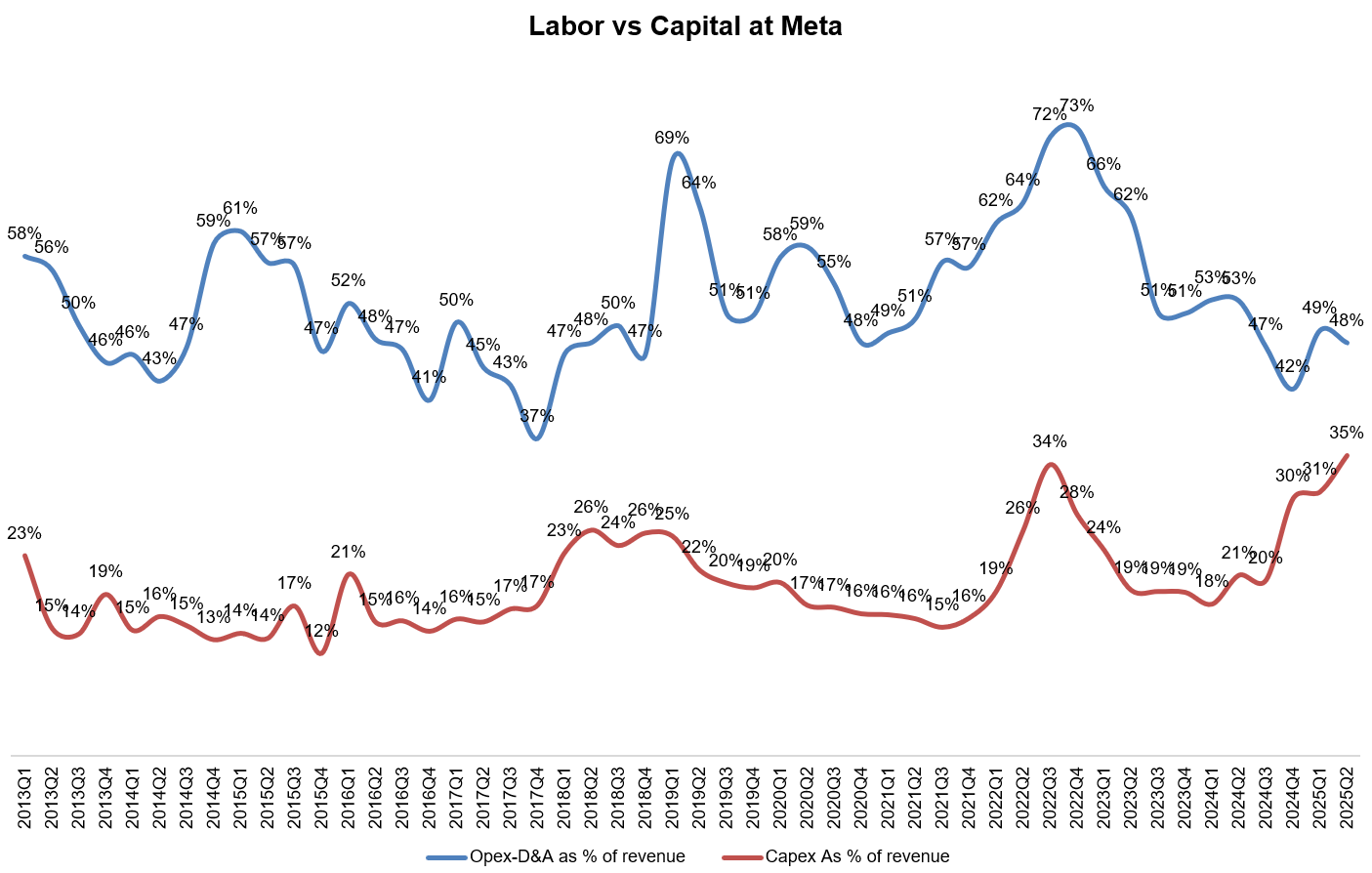

Capex and Opex

Speaking of headcount being increasingly scrutinized, just take a look at the chart below. I looked at Meta’s total opex in each quarter since 1Q’13 and subtracted Depreciation & Amortization (D&A) expenses to get a pretty good proxy for headcount-related expenses, i.e. “labor”. I calculated this number as a % of revenue and then compared it with capex as a % of revenue in each of those quarters. Notice how close these two lines are getting. Given what Meta has been hinting at for capex in 2026 (discussed more below), it may not be too long before these lines meet. It is stunning to look at this chart; I’m not quite a believer in the AGI mumbo-jumbo, but I gotta say if we were indeed heading towards AGI, this is what this chart would look like!
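The calculation behind the two lines can be sketched as follows. The class and function names are my own, and the quarterly figures are hypothetical placeholders (NOT Meta’s reported numbers) chosen only to show how the gap between the two ratios narrows.

```python
from dataclasses import dataclass


@dataclass
class Quarter:
    label: str
    revenue: float      # $ Bn
    total_opex: float   # total costs and expenses, $ Bn
    d_and_a: float      # depreciation & amortization, $ Bn
    capex: float        # $ Bn


def labor_pct(q: Quarter) -> float:
    """Proxy for headcount-related expenses: (opex - D&A) as a share of revenue."""
    return (q.total_opex - q.d_and_a) / q.revenue


def capex_pct(q: Quarter) -> float:
    """Capex as a share of revenue."""
    return q.capex / q.revenue


# Hypothetical quarters for illustration only
quarters = [
    Quarter("early", revenue=100.0, total_opex=60.0, d_and_a=10.0, capex=20.0),
    Quarter("late", revenue=120.0, total_opex=68.0, d_and_a=14.0, capex=42.0),
]

for q in quarters:
    gap_pp = (labor_pct(q) - capex_pct(q)) * 100
    print(f"{q.label}: labor {labor_pct(q):.0%}, capex {capex_pct(q):.0%}, gap {gap_pp:.0f}pp")
```

With real quarterly filings plugged in, the gap column is the distance between the two lines on the chart.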

Regulation

Europe can still derail the Meta party sooner than you think, although I don’t lose sleep over it as I think Meta has more leverage in this tussle, especially under the current US administration. It also helps that Europe is basically making up rules arbitrarily to hurt US tech companies on which their own citizens remain deeply dependent. From the call:

“we continue to monitor an active regulatory landscape, including the increasing legal and regulatory headwinds in the EU that could significantly impact our business and our financial results. For example, we continue to engage with the European Commission on our Less Personalized Ads offering or LPA, which we introduced in November 2024 and based on feedback from the European Commission in connection with the DMA.

As the commission provides further feedback on LPA, we cannot rule out that it may seek to impose further modifications to it that would result in a materially worse user and advertiser experience. This could have a significant negative impact on our European revenue as early as later this quarter. We have appealed the European Commission's DMA decision, but any modifications to our model may be imposed during the appeal process.”

Outlook

Meta guided 3Q’25 revenue to $47.5 Bn to $50.5 Bn (1% FX tailwind). Consensus for 3Q before the call was $46.3 Bn. So, if they meet the high end of the guide next quarter, consensus is currently ~9% too low. You can see why the stock was up ~10-12% AH yesterday.
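Here is the quick arithmetic for how far pre-call consensus sits below each point of the guided range. The helper name is my own; the inputs are the guide and consensus figures quoted above, in $ Bn.

```python
def pct_above(value: float, baseline: float) -> float:
    """How far `value` sits above `baseline`, as a fraction of the baseline."""
    return value / baseline - 1


guide_low, guide_high = 47.5, 50.5   # 3Q'25 revenue guide, $ Bn
consensus = 46.3                     # pre-call consensus, $ Bn
guide_mid = (guide_low + guide_high) / 2

for name, value in (("low", guide_low), ("mid", guide_mid), ("high", guide_high)):
    print(f"{name} end of guide vs consensus: {pct_above(value, consensus):+.1%}")
# low end of guide vs consensus: +2.6%
# mid end of guide vs consensus: +5.8%
# high end of guide vs consensus: +9.1%
```

Even the low end of the range is above where consensus was sitting before the call.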

They also narrowed the opex range down a bit from $113-118 Bn to $114-118 Bn.

Similarly, capex guide range was narrowed from $64-72 Bn to $66-72 Bn. In both cases, most investors were perhaps a bit surprised that they didn’t expand the high end of the range provided earlier.

However, they did hint at the 2026 opex guide, which has interesting implications. Meta mentioned that 2026 YoY opex growth will be higher than the 2025 opex growth rate, driven by higher depreciation and employee compensation (a talent-dense Superintelligence team is not cheap!). I’m assuming it to be ~25% given 2025 opex growth is going to be ~20-24%.

For capex in 2026, Meta expects “another year of similarly significant capex dollar growth”. Since 2025 capex is ~$30 Bn higher (at mid-point) than 2024 capex, we are probably going to see $100 Bn capex next year!
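The back-of-the-envelope math for the ~$100 Bn figure looks like this. It takes the mid-point of the narrowed 2025 capex guide of $66-72 Bn and reads "similarly significant capex dollar growth" as another ~$30 Bn step-up, which is my interpretation rather than anything Meta has guided to explicitly.

```python
def midpoint(low: float, high: float) -> float:
    return (low + high) / 2


capex_2025_mid = midpoint(66.0, 72.0)   # narrowed 2025 guide, $ Bn
dollar_growth = 30.0                    # ~2025 vs 2024 increase at mid-point, per the text
capex_2026_est = capex_2025_mid + dollar_growth  # assumes a similar dollar step-up in 2026

print(f"2025 mid-point: ${capex_2025_mid:.0f} Bn")   # 2025 mid-point: $69 Bn
print(f"2026 estimate:  ${capex_2026_est:.0f} Bn")   # 2026 estimate:  $99 Bn
```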

Remember how mad everyone was back on the 3Q’22 call when Meta guided 2023 capex to be $34-39 Bn? Such a quaint time!

Closing Words

Great quarter! I will share some thoughts on valuation behind the paywall later.

I will cover Amazon’s earnings tomorrow and Microsoft’s earnings on Saturday.

CoStar suing Zillow

In my CoStar Deep Dive, I mentioned: “it seems perhaps fair to assume that if you are building any real competitor to any of CoStar’s products, you should expect to be sued unless you yourself go to a great length to ensure CoStar’s IP is being respected by anyone who’s using your products/services. And I’m not sure even that would be enough from being sued by CoStar!”

It certainly wasn’t enough for Zillow as CoStar has decided to sue them. Naturally, I uploaded the lawsuit to NotebookLM and asked it to create a lucid video on the 38-page PDF.