Constraints and challenges of value capture in the AI race

There are a couple of podcasts I would like to highlight today. First, Acquired interviewed Sierra’s co-founders: Bret Taylor (currently Chair of the OpenAI board and former Salesforce co-CEO) and Clay Bavor (who was at Google for 18+ years). They have an interesting vantage point: both built their careers at generation-defining incumbent tech companies, were clearly doing very, very well, and yet chose to leave those careers behind to venture into AI.

I found the paragraph below particularly useful for contextualizing Meta’s pivot to building its small but high-talent-density Superintelligence team:

“…the primary constraint I don't think is capital. It starts with the people, and there's a small set of people who know how to architect these models, do the pre-training runs, do post-training RL runs, and so that would be the starting place. And then, to your point, the capital outlay for building a data center that can train these multi-trillion parameter count models is just enormous, and you have to amortize the cost of the people, amortize the cost of the capital to build out the data centers, and then do that, to your point, in a pretty short period of time in order to make the math work. And so I do think there will be a very small number of these frontier models and research labs producing them. You know, they will optimize all the way down to the memory, the chips, power delivery, and build this highly vertically integrated stack to get as much value out of the model as quickly as possible and at the lowest cost possible. And by the way though, just with all the press around the talent, it's still a rounding error compared to the infrastructure. So I think it's worth keeping that in mind.”

Their point about vertical integration is also a reminder of why Google may already be well ahead of where its competitors in the current AI race hope to be in a few years. Dylan Patel of SemiAnalysis made the point on an a16z podcast that if frontier models are concentrated among just a handful of developers whose models open-source alternatives cannot quite match, custom silicon will do better. In this scenario, Google’s deep vertical integration of its AI stack through TPUs will become much more visible.

Another bit that I thought was interesting in the Acquired interview was their point about how they think about creating leverage through AI:

…we always like to say the way we think about an AI first company is we're building a machine to produce happy customers…And I think that's important because it's like if something comes off the assembly line of the machine that's malformed, you don't just fix that thing. You say what part of the machine broke to produce the malformed item.

And so just as it relates to, for example, software engineering, we have this philosophy: when Cursor, which is the most popular co-pilot for software engineers to write code and now has some more agentic flavors, produces incorrect code, our philosophy is don't fix the code, fix the context that Cursor had that produced the bad code. And I think that's a big difference when you're trying to make a company driven by AI. So essentially, if you just fix the code, you're not adding leverage. If you go back and say, what context did this coding AI not have that, had it had it, it would have produced the correct code? So I don't want to pretend we're perfect here, but that's the way we think about it. I really like thinking of our business as a machine.

In theory, any company should be able to “add leverage” through AI, but given their experience at large companies, they also know AI will require a cultural change that many incumbents will have a hard time navigating. I know they are highly incentivized to point out the incumbents’ weaknesses, but the point does have merit. I do think it helps to have founders or a management team at the helm who cast a long shadow over their companies, so that they can push enough agility through the organization to embed AI deep in its workflows. From the podcast:

“…we're a new company. So it's just so easy to do these things at a small scale. I observe just like having everyone in our company, you know, you didn't use ChatGPT Deep Research before your sales meeting? Are you kidding me? Like, that's a best practice that everyone should do. Imagine doing that with, you know, 10,000 salespeople, you know, to roll that out. So I think about it a lot, and then just having the vantage point of having come from larger companies, I just have a ton of empathy for, for lack of a better word, the cultural change management of absorbing these technologies into larger organizations. So we're trying to be the poster child of it, and then because we are a partner to so many larger firms, I have a lot of empathy for the challenges of adopting technology into cultures. I think it's really, really hard and I have a ton of respect for leaders who are able to do it at a larger scale.”

One big challenge in the AI race, however, is how hard it is for model developers to capture value proportionate to what they create. Dylan Patel made this point on the a16z podcast:

I think the main thing is that AI is already generating more value than the spend. It's that the value capture is broken, right? Like, I legitimately believe OpenAI is not even capturing 10% of the value they've created in the world already. And I think the same applies to, you know, Anthropic and Cursor and whoever else you're looking at. I think the value capture is really broken.

Even internally, I think what we've been able to do with like four devs in terms of automation, like, our spend on the Gemini API is absurdly low, and yet we go through every single permit and regulatory filing around every single data center with AI, and we take satellite photos of every data center and we're able to label our data set and then recognize what generators people are using, what cooling towers, and the construction progress and substation. All this stuff is automated and it's only possible because of GenAI, and we do it with very few developers, and then the value capture that I'm able to generate by selling this data and consulting with it is so high, but the companies making it (the model)…they get nothing out of it.

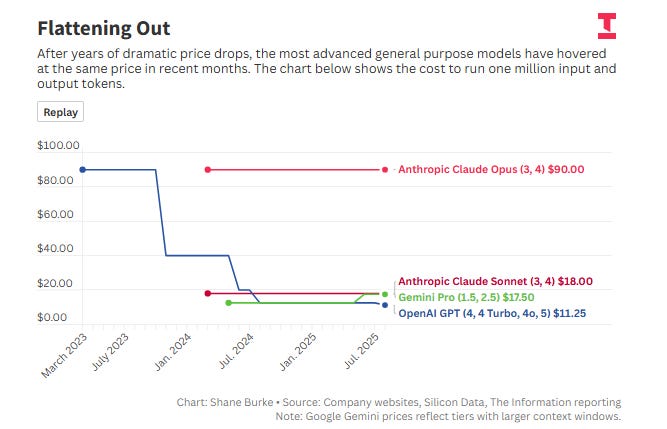

I think many SOTA model developers are gradually waking up to this reality. The Information pointed out yesterday that token prices seem to have been stable in recent months, in contrast to the last couple of years. The subscription model just doesn’t seem appropriate for many use cases. For example, this Reddit post points out how one developer consumed roughly $50k worth of tokens while paying $200 for the monthly subscription. This is, of course, a business model problem. Some business models capture value almost perfectly, with very little leakage. A hedge fund is perhaps the epitome of a business model that captures value perfectly (and, a lot of times, beyond the value it generated). On the other hand, a newsletter subscription business is usually pretty bad at capturing the value it creates (if it does indeed create any value). Of course, the key difference here is that building a newsletter doesn’t cost much, whereas we are spending tens of billions to develop SOTA models.
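To put the Reddit example in rough numbers, here is a back-of-the-envelope sketch. The $50k and $200 figures come from the post cited above; treating the API list price as the benchmark for what those tokens "should" have earned is an assumption for illustration, not how any provider actually books revenue.

```python
# Figures from the Reddit example above. Treating the API list price as the
# benchmark for what those tokens "should" have earned is an assumption.
api_equivalent_usd = 50_000  # tokens consumed, valued at API list prices
subscription_usd = 200       # monthly subscription actually paid

usage_multiple = api_equivalent_usd / subscription_usd
captured_share = subscription_usd / api_equivalent_usd

print(f"Usage was {usage_multiple:.0f}x the subscription price")
print(f"Subscription covered {captured_share:.1%} of list-price token spend")
```

On these assumptions, the subscription covered well under 1% of what the same usage would have cost at list prices.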

It may be tempting to think it won’t be that difficult to capture value over time. While I have no doubt that SOTA model developers will get better at it, there is a long list of revolutionary technologies that had a hard time capturing the value they created. Let me share a personal example. I recently signed up for a ChatGPT Pro subscription ($200/month) just to see whether there is a noticeable difference between Plus and Pro. One of my family members asked me to run a query that had important career implications for her. After I sent her ChatGPT Pro’s response, she was really glad and told me it would probably have cost her $1,000 to get such information if not for ChatGPT. At first, I thought even $200/month could be considered incredible value if it could solve at least one such problem every couple of months. The only problem is that when I ran the same query on Gemini 2.5 Pro, for which I pay $20/month, it also came up with a very, very good response. ChatGPT Pro was slightly better in some marginal details, but now I was starting to feel $200/month wasn’t worth it for those marginal improvements. You see the challenge here? The consumer surplus from these models is undeniable, but SOTA models remain competitive enough that value capture may prove to be a much, much harder challenge than AI bulls currently appreciate.
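The break-even reasoning in this anecdote can be sketched in a few lines. This is a rough illustration, not a pricing model: the $1,000 value and the $200 and $20 monthly prices come from the example above, while assuming exactly one such answer every two months is a simplification of "every couple of months."

```python
# Assumptions taken from the anecdote above: one answer worth ~$1,000,
# needed roughly once every two months ("every couple of months").
VALUE_PER_ANSWER_USD = 1_000
MONTHS_PER_ANSWER = 2

def value_to_price(monthly_price_usd: float) -> float:
    """Value received divided by subscription cost over one answer cycle."""
    return VALUE_PER_ANSWER_USD / (monthly_price_usd * MONTHS_PER_ANSWER)

chatgpt_pro = value_to_price(200)  # ChatGPT Pro, $200/month
gemini = value_to_price(20)        # the $20/month plan used for Gemini 2.5 Pro

# Extra spend per cycle for Pro's marginal improvements over Gemini.
premium_per_cycle = (200 - 20) * MONTHS_PER_ANSWER

print(f"ChatGPT Pro: {chatgpt_pro:.1f}x value per dollar")
print(f"Gemini:      {gemini:.1f}x value per dollar")
print(f"Pro premium per cycle: ${premium_per_cycle}")
```

Under these assumptions both plans clear break-even comfortably; what Pro adds is roughly $360 per cycle for marginal improvements, which is the value-capture problem in miniature.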

In addition to "Daily Dose" (yes, DAILY) like this, MBI Deep Dives publishes one Deep Dive on a publicly listed company every month. You can find all 62 Deep Dives here.

Current Portfolio:

Please note that these are NOT my recommendations to buy/sell these securities, but just a disclosure from my end so that you can assess potential biases I may have because of my own personal portfolio holdings. Always consider my write-ups my personal investing journal, and never forget that my objectives, risk tolerance, and constraints may bear no resemblance to yours.

My current portfolio is disclosed below: