MBI Daily Dose (July 04, 2025)

Companies or topics mentioned in today's Daily Dose: Follow-up on golden age of digital advertising, LLM adoption in enterprise, Anthropic, Money

Happy Fourth of July! It’s a great day to remind myself never to bet against America.

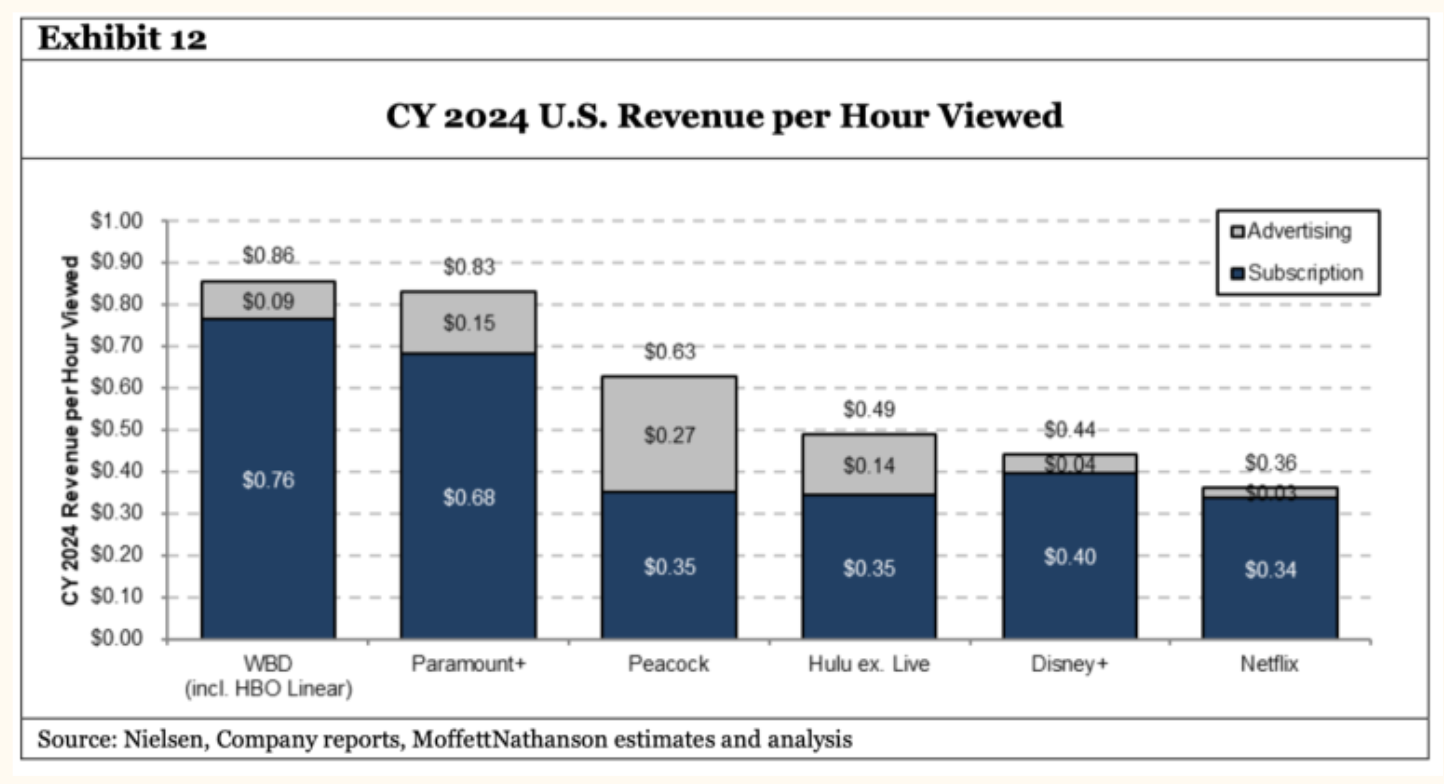

While on my walk yesterday, I was thinking about Eric Seufert's podcast that I mentioned in the last Daily Dose and I was making a connection with an earlier post by Ben Thompson. Ben posted about this chart below (originally by MoffettNathanson) in early 2025 and quoted MoffettNathanson to make a striking point: "The average Paramount+ subscriber is paying double in subscription fees for each hour of viewing pleasure compared to the average Netflix subscriber in the U.S."

As I was noodling on what Eric Seufert said in the podcast ("As ads become more effective at generating conversions, it makes sense that platforms would show fewer of them per session given an upper bound on consumers’ discretionary income and appetite for various goods") and the point the MoffettNathanson analysts raised, I wondered whether this dynamic will be even more prominent for scaled social networking apps.

Netflix spreads its fixed content costs over a vast audience, letting it charge less per effective viewing hour while still out-investing rivals; the same principle now favors the largest advertising platforms. As generative-AI targeting and creative tools lift conversion rates, each impression becomes more valuable. For example, Meta, armed with the deepest behavioral dataset and the most liquid auction, can reach any revenue or ROAS goal with fewer ad exposures than smaller networks, then fill the reclaimed feed space with friends’ posts, Reels, or new AI features. A cleaner, more engaging experience drives higher usage, which feeds more data into Meta, attracts higher bids, and reinforces its advantage. Less-scaled competitors, lacking comparable data and auction depth, may feel compelled to keep ad load high to maintain revenue, degrading user experience and falling further behind. Some of this dynamic was already sort of going on, but AI may be a bit of a further accelerant.
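To make the ad-load arithmetic concrete, here is a minimal back-of-the-envelope sketch. It assumes a toy model in which revenue is simply impressions times conversion rate times revenue per conversion; the function name and every number below are hypothetical and chosen only for illustration, not taken from Meta or any other platform.

```python
import math


def impressions_needed(revenue_target, conversion_rate, revenue_per_conversion):
    """Impressions required to hit a revenue target in a toy model.

    Assumes revenue = impressions * conversion_rate * revenue_per_conversion.
    Real ad auctions are far more complicated; this is only an illustration.
    """
    revenue_per_impression = conversion_rate * revenue_per_conversion
    return math.ceil(revenue_target / revenue_per_impression)


# Hypothetical inputs, not real platform data.
target = 1_000.0              # revenue goal in dollars
value_per_conversion = 50.0   # revenue earned per conversion

# Same revenue goal before and after a hypothetical 50% lift in conversion rate.
baseline = impressions_needed(target, 0.010, value_per_conversion)
improved = impressions_needed(target, 0.015, value_per_conversion)

# Share of ad slots that could be handed back to organic content.
freed_share = 1 - improved / baseline

print(f"baseline impressions: {baseline}")
print(f"improved impressions: {improved}")
print(f"ad slots freed up:    {freed_share:.0%}")
```

In this toy model, a 50% lift in conversion rate lets the platform hit the same revenue goal with roughly a third fewer impressions, which is the feed space that could be refilled with organic content.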

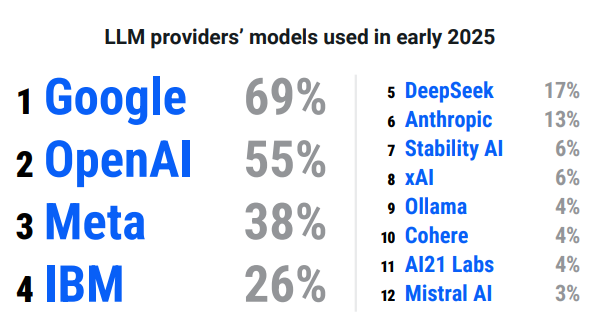

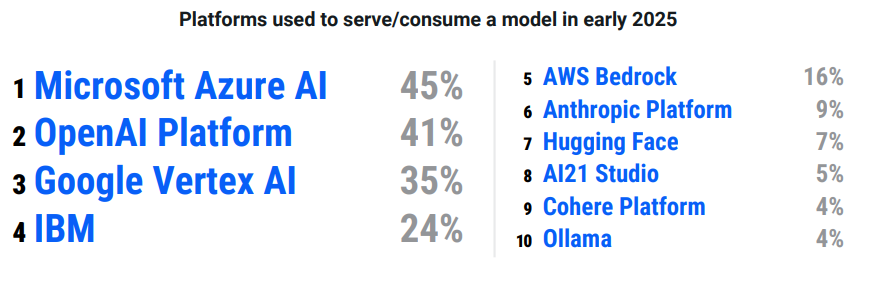

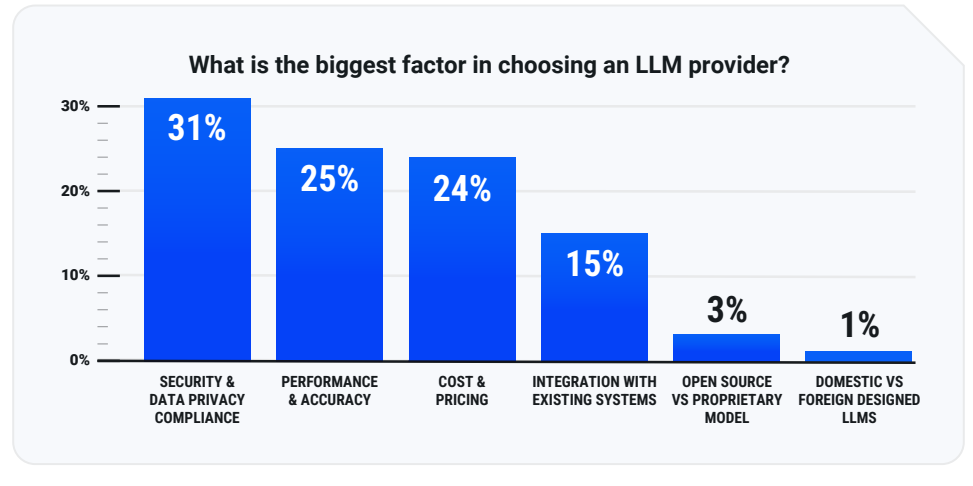

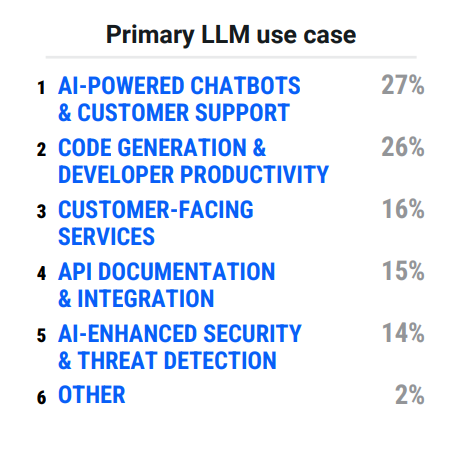

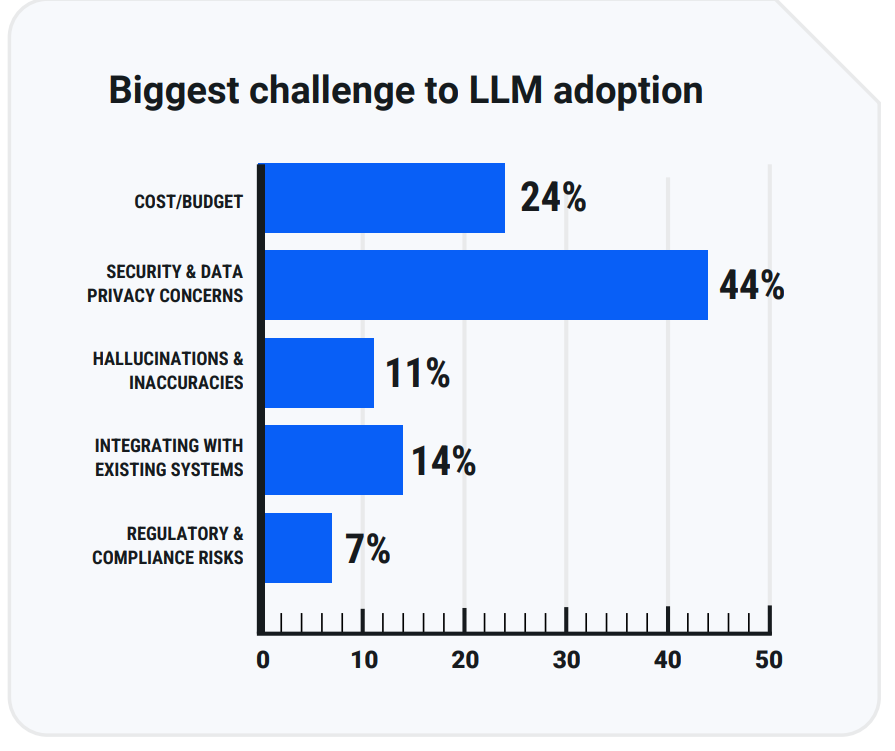

Kong surveyed 550 users, developers, engineers, and IT decision-makers to understand LLM usage/adoption. With the caveat of the sample size and other limitations for these surveys, here's a quick look at key highlights from the report:

Source: all images are from Kong Report

In addition to "Daily Dose" posts like this one, MBI Deep Dives publishes one Deep Dive on a publicly listed company every month. You can find all 60 Deep Dives, including Excel models, here. I would greatly appreciate it if you shared MBI content with anyone who might find it useful!

SemiAnalysis mentioned yesterday that Anthropic has some similarity with DeepSeek in that they are both compute constrained and that, despite popular belief, Anthropic's partnership with Amazon is still a "work-in-progress":

"In the world of AI, the only thing that matters is compute. Like DeepSeek, Anthropic is compute constrained.

Having noticed the success of token consumers like Cursor, the company launched Claude Code, a coding tool built into the terminal. Claude Code usage has skyrocketed, leaving OpenAI’s codex in the dust.

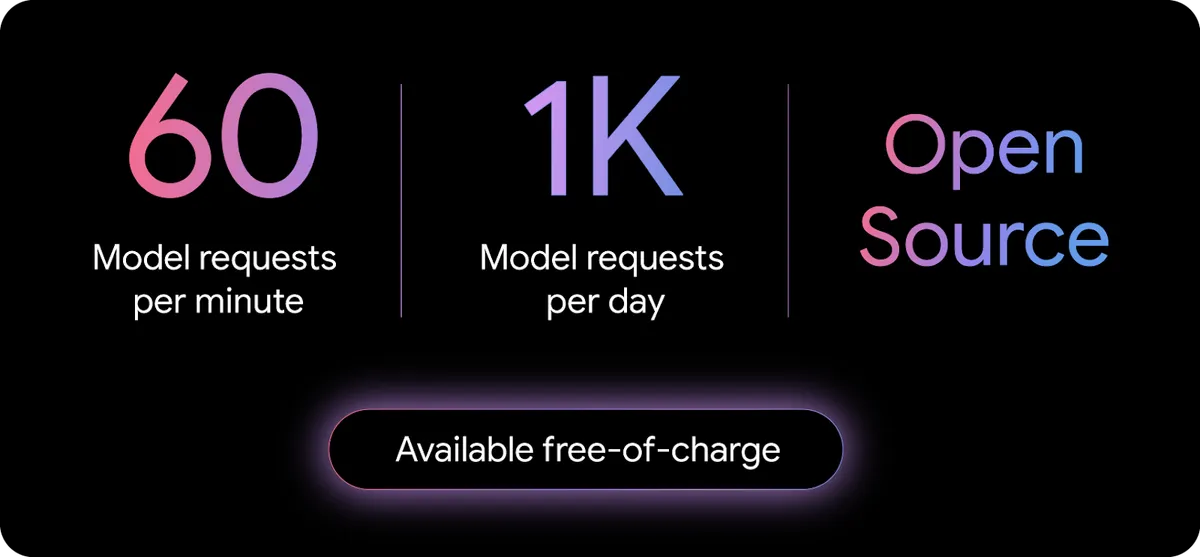

Google, in response, also released their own tool: Gemini CLI. While it is a similar coding tool to Claude Code, Google uses their compute advantage with TPUs to offer unbelievably large request limits at no cost to users.

Anthropic is getting more than half a million Trainium chips, which will then be used for both inference and training. This relationship is still a work-in-progress as, despite popular opinion, Claude 4 was not pretrained on AWS Trainium. It was trained on GPUs.

Anthropic also turned to their other major investor Google for compute. Anthropic rents significant amounts of compute from GCP, specifically TPUs. Following this success, Google Cloud is expanding their offerings to other AI companies, striking a deal with OpenAI. Unlike previous reporting, Google is only renting GPUs to OpenAI – not TPUs."

Yesterday I received an early copy of "The Inner Compass", Lawrence Yeo's first book. I will share my thoughts once I finish reading it. The book will be available to buy on July 8, but you can join the waitlist now.

If you are not familiar with Yeo's work, I can tell you that he once wrote a piece on money that is, frankly speaking, the best piece I have ever read on anything related to money. Yeo writes on money in a way that took me to places I may never have gone just thinking by myself. If you haven't read his piece "Money Is the Megaphone of Identity", give it a read.

Current Portfolio:

Please note that these are NOT my recommendations to buy/sell these securities, but just a disclosure from my end so that you can assess potential biases that I may have because of my own personal portfolio holdings. Always consider my write-up my personal investing journal and never forget that my objectives, risk tolerance, and constraints may bear no resemblance to yours.